GMP Annex 22: AI Regulation and the Future of Pharma Manufacturing

Discover how EU GMP Annex 22 shapes the future of AI in pharmaceutical manufacturing and what’s next for dynamic and generative models.

Carl Bufe

10/15/2025 · 6 min read

Annex 22 and Beyond

The draft publication of EU and PIC/S GMP Annex 22 represents a turning point in pharmaceutical manufacturing: the first formal recognition in GMP guidance that artificial intelligence has graduated from experimental novelty to regulated reality. While the annex establishes clear boundaries for static, deterministic AI models in critical GMP applications, it also opens a conversation about what lies beyond these initial guardrails.

For innovators, R&D leaders, and digital quality strategists, Annex 22 is not the destination but rather the starting line for AI's evolving regulatory journey in pharmaceutical manufacturing.

Current Foundations: Where Annex 22 Draws the Line

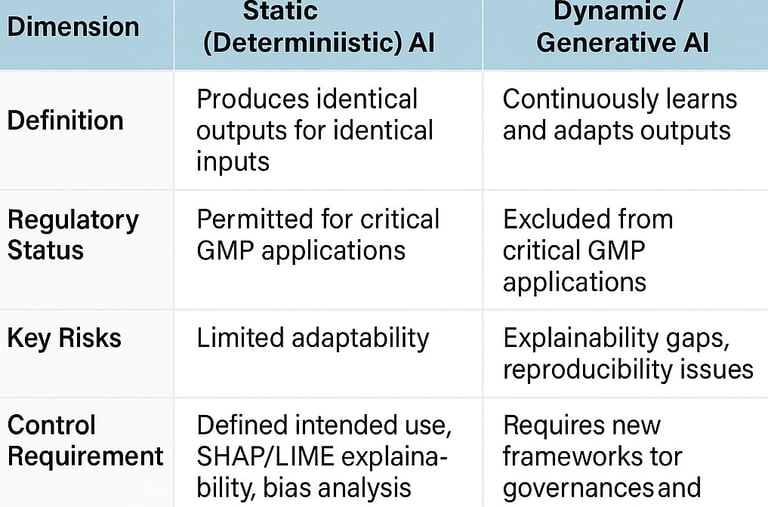

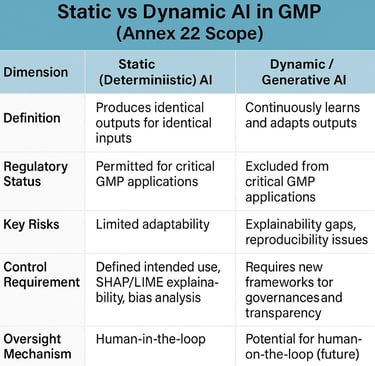

Annex 22's scope reflects deliberate regulatory caution, rooted in today's technological realities. Only static models with deterministic output (those that produce identical outputs for identical inputs) may be used in critical GMP applications, and then only under the annex's requirements. Dynamic models, generative AI, and large language models are explicitly excluded from critical applications, meaning those with a direct impact on patient safety, product quality, or data integrity. This boundary acknowledges fundamental limitations: gaps in explainability, challenges with reproducibility, and the inability to guarantee the consistent outputs that GMP demands.
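The static/dynamic distinction turns on determinism: a static model given the same input must return the same output. As a minimal sketch of how a validation team might spot-check this, assuming the model can be called as a plain Python function returning JSON-serialisable output, the code below hashes repeated outputs and compares them. The helper names and both toy models are hypothetical illustrations, not anything defined in Annex 22.

```python
import hashlib
import json
import random
from typing import Callable, Sequence

def output_fingerprint(output) -> str:
    """SHA-256 of a JSON-serialisable model output, so repeated runs compare exactly."""
    payload = json.dumps(output, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def check_deterministic(model: Callable, inputs: Sequence, repeats: int = 5) -> bool:
    """Hypothetical helper: True if every input gives an identical output on every repeat."""
    for x in inputs:
        fingerprints = {output_fingerprint(model(x)) for _ in range(repeats)}
        if len(fingerprints) != 1:
            return False
    return True

# Toy static model: fixed weights, so identical input always gives identical output.
def static_model(features):
    weights = [0.4, -0.2, 0.1]
    score = sum(w * f for w, f in zip(weights, features))
    return {"score": round(score, 6), "label": "pass" if score >= 0 else "fail"}

# Toy non-deterministic model: random noise stands in for sampling-based behaviour.
_rng = random.Random(0)
def stochastic_model(features):
    return {"score": _rng.random()}

print(check_deterministic(static_model, [[1, 2, 3], [0, 0, 1]]))  # True
print(check_deterministic(stochastic_model, [[1, 2, 3]]))         # False
```

A passing check on a finite sample of inputs is supporting evidence rather than proof of determinism; floating-point results can also vary across hardware and library versions, which a real validation would need to consider.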

Yet even within these constraints, the annex shows regulatory sophistication. Requirements for intended-use definitions, bias analysis, feature attribution using tools such as SHAP and LIME, confidence scoring, and continuous performance monitoring together establish a comprehensive lifecycle framework. The emphasis on "human-in-the-loop" oversight for non-critical applications signals an understanding that AI adoption exists on a spectrum rather than as a binary choice between permission and prohibition.
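SHAP, one of the attribution tools named above, is built on Shapley values from cooperative game theory. The sketch below is not the `shap` library: it is a brute-force exact computation for a small hypothetical model, in which features "absent" from a coalition are replaced by a baseline value. It is meant only to show what a feature attribution measures, and it is practical for a handful of features at most, since the number of coalitions grows as 2^n.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

def shapley_values(predict: Callable[[List[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values for a single prediction.

    Features outside a coalition take their baseline value (a simplifying
    assumption; the shap library supports several perturbation schemes).
    """
    n = len(x)

    def value(coalition):
        # Model output when only features in `coalition` take their real values.
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1, drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phis.append(phi)
    return phis

# Hypothetical tablet-hardness model with an interaction term; the third
# feature (press speed) is deliberately ignored, so its attribution is zero.
def hardness_model(f):
    force, moisture, _speed = f
    return 2.0 * force - 1.5 * moisture + 0.5 * force * moisture

phis = shapley_values(hardness_model, [3.0, 2.0, 1.0], [1.0, 1.0, 1.0])
print(phis)  # approximately [5.5, -0.5, 0.0]
```

The attributions sum to the difference between the prediction and the baseline prediction (here 6.0 - 1.0 = 5.0), the "efficiency" property that makes Shapley-based explanations auditable.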

The Regulatory Horizon: Dynamic and Generative AI in GMP

The exclusion of dynamic and generative AI from critical GMP applications is practical, not permanent. Regulatory frameworks worldwide are advancing rapidly, with technology often outpacing policy. The FDA is actively developing AI-specific regulatory frameworks that emphasise transparency, explainability, governance, and bias mitigation. International bodies like ICH are integrating AI considerations into Model-Informed Drug Development guidelines, standardising terminology and validation criteria across jurisdictions.

Explainability emerges as the critical bridge between today's static models and tomorrow's dynamic systems. Advances in explainable AI, particularly real-time patient insights, bias explorers, feature importance displays, and drift monitoring, are turning opaque "black boxes" into transparent, auditable systems. Healthcare organisations deploying explainability frameworks report faster adoption, less training friction, and greater clinical confidence. As these capabilities mature, the technical barriers that keep dynamic AI out of critical GMP contexts may dissolve.

Regulatory sandboxes offer another promising pathway. These controlled environments allow regulators and innovators to test AI tools collaboratively, with less bureaucratic friction while safeguards remain in place. The US White House AI Action Plan explicitly champions sandboxes as a way to streamline approval processes while preserving oversight. Similar models could enable pharmaceutical manufacturers to validate dynamic AI systems under regulatory observation, building the evidence needed to support broader approval.

Large Language Models: Responsible Innovation in Non-Critical Workflows

While Annex 22 prohibits LLMs in critical GMP operations, it acknowledges their potential for non-critical tasks, provided human oversight remains integral. This opening creates substantial opportunities for pharmaceutical organisations to harness generative AI responsibly for documentation drafting, training content generation, regulatory intelligence synthesis, protocol template creation, and project management support.

The pharmaceutical industry is already demonstrating the value of LLMs in drafting technical and scientific documents, cutting timelines from weeks to days. Companies applying generative AI to non-GMP workflows report efficiency gains while maintaining quality through structured human review. The key lies in architecture: human-in-the-loop models that augment rather than replace human judgment.

However, emerging research challenges the assumption that human-in-the-loop approaches are universally optimal. Some experts advocate "human-on-the-loop" models, in which AI performs tasks autonomously while humans retain supervisory control from a distance. Proponents argue that this approach reduces the risk of cognitive deskilling, improves scalability, and still maintains accountability. As LLM capabilities advance and explainability improves, pharmaceutical organisations may move from rigorous human verification of every AI output to exception-based oversight, freeing qualified personnel for higher-value work.
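The difference between human-in-the-loop review and exception-based, human-on-the-loop oversight can be expressed as a simple routing rule. A minimal sketch, assuming a model that returns a label plus a calibrated confidence score between 0 and 1; the `Prediction` type, the 0.90 threshold, the 5% audit rate, and the route names are all hypothetical illustrations, not values taken from Annex 22 or any other guidance.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # assumed calibrated, in [0, 1]

def route(pred: Prediction, mode: str, threshold: float = 0.90,
          audit_rate: float = 0.05, rng: Optional[random.Random] = None) -> str:
    """Decide who acts on a model output.

    mode="in_the_loop": every output goes to a human reviewer.
    mode="on_the_loop": only low-confidence outputs, plus a random audit
    sample of confident ones, go to a human; the rest proceed and are logged.
    """
    if mode == "in_the_loop":
        return "human_review"
    if mode != "on_the_loop":
        raise ValueError(f"unknown mode: {mode}")
    if pred.confidence < threshold:
        return "human_review"        # exception: the model is unsure
    rng = rng or random.Random()
    if rng.random() < audit_rate:
        return "human_audit"         # spot-check of a confident output
    return "auto_accept_logged"      # proceeds without review, but is recorded

draft = Prediction("DOC-042", "ready_for_approval", 0.97)
print(route(draft, "in_the_loop"))  # human_review
print(route(draft, "on_the_loop", rng=random.Random(1)))
```

The random audit branch matters: without it, confident outputs would never be checked by a person, and errors the model is confident about would go unnoticed. The on-the-loop branch illustrates the possible future state described above, not a current Annex 22 requirement.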

Industry-Regulator Collaboration: Co-Creating the Future

The evolution from Annex 22's initial framework to broader AI integration demands close collaboration between regulators and industry innovators. Recent developments illustrate this collaborative momentum. The UK's MHRA launched a National AI Commission, including international experts from organisations such as the Coalition for Health AI, Google, and Microsoft, to help shape safe, transparent AI use in healthcare. The FDA and MHRA are also strengthening transatlantic alignment on AI regulation, identifying strategic opportunities and accelerating joint initiatives.

Multidisciplinary engagement proves essential. Pharmaceutical companies deploying AI in manufacturing environments recognise that successful implementation requires quality assurance professionals, data scientists, IT specialists, subject matter experts, and regulatory affairs managers working in concert. Trade associations like EFPIA are actively supporting regulators in developing concrete positions on AI in GMP manufacturing, emphasising that existing computerised system validation frameworks provide robust foundations for managing AI-specific risks.

This collaborative approach extends to standards development. Organisations are investing heavily in educational initiatives, harmonised terminology (as reflected in Annex 22's glossary defining SHAP, LIME, overfitting, and related concepts), and shared best practices for transparency, explainability, governance, and bias mitigation. The goal is not merely regulatory compliance but the cultivation of trust—among regulators, manufacturers, healthcare providers, and ultimately patients.

Preparing for 2026 and Beyond

As Annex 22 moves from consultation draft toward final adoption, anticipated in 2026, pharmaceutical organisations face immediate implementation imperatives alongside longer-term strategic positioning. Early adopters that establish AI governance frameworks, model lifecycle management protocols, and compliance monitoring systems will gain competitive advantages as regulatory expectations mature.

The challenges are substantial but navigable. Data quality and availability concerns, integration complexities, skilled talent shortages, regulatory compliance navigation, and demonstrating rapid ROI all pose barriers to AI adoption. Yet the pharmaceutical industry's experience managing computerised system validation, data integrity requirements, and GxP compliance provides proven methodologies for addressing AI-specific risks.

Looking forward, several trends will shape AI regulation in pharma. Risk-based approaches will continue to differentiate between AI applications with minimal impact (e.g., scheduling optimisation) and those with critical impact (e.g., batch release decisions), with scrutiny proportional to the patient safety implications. Continuous post-market monitoring tailored to AI characteristics—such as performance drift, dataset shift, and evolutionary learning—will become standard practice. Regulatory frameworks will increasingly accommodate learning systems through change management protocols, distinguishing minor refinements from modifications warranting additional review.
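Drift monitoring is one of the more concrete parts of that post-deployment picture. One common, though by no means mandated, way to quantify dataset shift is the Population Stability Index (PSI), which compares the binned distribution of a model input or output score in production against the validation baseline. A minimal sketch follows; the bin edges, sample data, and the 0.1 / 0.25 alert bands are widely used rules of thumb assumed here, not thresholds from Annex 22.

```python
import math
from bisect import bisect_right
from typing import List, Sequence

def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def psi(baseline: Sequence[float], current: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (cur - base) * ln(cur / base)."""
    total = 0.0
    for b, c in zip(bin_fractions(baseline, edges), bin_fractions(current, edges)):
        b, c = max(b, eps), max(c, eps)  # guard against log(0) for empty bins
        total += (c - b) * math.log(c / b)
    return total

def drift_status(value: float) -> str:
    # Rule-of-thumb bands (assumption): <0.1 stable, 0.1-0.25 monitor, >0.25 investigate.
    if value < 0.1:
        return "stable"
    if value <= 0.25:
        return "monitor"
    return "investigate"

# Hypothetical model scores: validation baseline vs. a shifted production window.
edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
shifted = [0.8, 0.85, 0.9, 0.95, 0.6, 0.7, 0.8, 0.9]
print(drift_status(psi(baseline, baseline, edges)))  # stable
print(drift_status(psi(baseline, shifted, edges)))   # investigate
```

In a change-management setting, a sustained "investigate" result would be a trigger for review rather than an automatic retrain, which keeps the deployed model static in the sense Annex 22 requires.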

Embracing the Journey

Annex 22 reflects regulatory pragmatism: it establishes clear rules for what we understand today while acknowledging that those boundaries are provisional. Dynamic AI models excluded from critical GMP applications in 2025 may become routine by 2030 or earlier as explainability advances, validation methodologies mature, and collaborative evidence accumulates. Generative AI supporting non-critical workflows today may expand into quality-adjacent functions as human-in-the-loop architectures prove their reliability.

For pharmaceutical innovators, R&D leaders, and digital quality strategists, the path forward demands both discipline and imagination.

Implementing Annex 22's requirements builds regulatory credibility and organisational capability. At the same time, engaging with emerging explainability technologies, participating in regulatory sandboxes, contributing to industry-regulator dialogue, and investing in workforce development position organisations to lead as regulatory ambassadors.

We believe the future of AI in pharmaceutical manufacturing will be written collaboratively—by regulators committed to patient safety, innovators driven by efficiency and insight, and professionals dedicated to responsible integration of transformative technologies.

Annex 22 is the prologue. The chapters and updates ahead promise to be extraordinary and exciting.

References

1) European Commission Health. (2025). Annex 22: Artificial Intelligence - Draft Consultation Document. https://health.ec.europa.eu/document/download/5f38a92d-bb8e-4264-8898-ea076e926db6_en

2) PIC/S. (2025, July 6). Publications. https://picscheme.org/en/publications

3) GMP Compliance. (2025, July 8). Drafts of EU GMP Guideline Annex 11, Annex 22 and Chapter 4 released for comment. https://www.gmp-compliance.org/gmp-news/drafts-of-eu-gmp-guideline-annex-11-annex-22-and-chapter-4-released-for-comment

4) McKinsey & Company. (2024, January 8). Generative AI in the pharmaceutical industry: Moving from hype to reality. https://www.mckinsey.com/industries/life-sciences/our-insights/generative-ai-in-the-pharmaceutical-industry-moving-from-hype-to-reality

5) NSF. (2025, July 20). Revised EU-PIC/S GMP on Documentation, Computer Systems and AI. https://www.nsf.org/life-science-regulatory-news/revised-eu-pic-s-gmp-on-documentation-computer-systems-and-ai

6) EFPIA. (2024, September). Position Paper: Application of AI in a GMP / Manufacturing environment. https://www.efpia.eu/media/vqmfjjmv/position-paper-application-of-ai-in-a-gmp-manufacturing-environment-sept2024.pdf

7) EMA. (2024, December 5). Generative AI in Pharmaceutical Manufacturing: Use Cases and Impact. https://www.ema.co/additional-blogs/addition-blogs/generative-ai-in-pharmaceutical-manufacturing-use-cases-and-impact

8) Pharmaceutical Technology. (2025, May 18). Pharma's AI prospects get nudged into the future with EU's AI act. https://www.pharmaceutical-technology.com/features/pharmas-ai-prospects-get-nudged-into-the-future-with-eus-ai-act/

9) European Commission Health. Good Manufacturing Practice Guidelines: Chapter 4, Annex 11 and Annex 22 Stakeholder Consultation.

10) UK Government. (2025, October 7). Patients to benefit as UK and US regulators forge new collaboration on medical technologies and AI. https://www.gov.uk/government/news/patients-to-benefit-as-uk-and-us-regulators-forge-new-collaboration-on-medical-technologies-and-ai

11) US FDA. (2025, March 24). Artificial Intelligence in Software as a Medical Device. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-software-medical-device

12) ScienceDirect. (2025). Advancing explainable AI in healthcare: Necessity, progress, and challenges. https://www.sciencedirect.com/science/article/pii/S1476927125002609

13) DIA Global Forum. (2025, June 5). AI in Drug Development: Clinical Validation and Regulatory Innovation are Dual Imperatives. https://globalforum.diaglobal.org/issue/june-2025/ai-in-drug-development-clinical-validation-and-regulatory-innovation-are-dual-imperatives/

14) FDLI. (2025, August 19). Regulating the Use of AI in Drug Development: Legal Challenges and Compliance Strategies. https://www.fdli.org/2025/07/regulating-the-use-of-ai-in-drug-development-legal-challenges-and-compliance-strategies/

15) Nature Medicine. (2025). For trustworthy AI, keep the human in the loop. https://www.nature.com/articles/s41591-025-04033-7

16) ScienceDirect. (2025). Regulatory sandboxes: A new frontier for rare disease therapies. https://www.sciencedirect.com/science/article/pii/S2950008725000547

© 2025. All rights reserved.